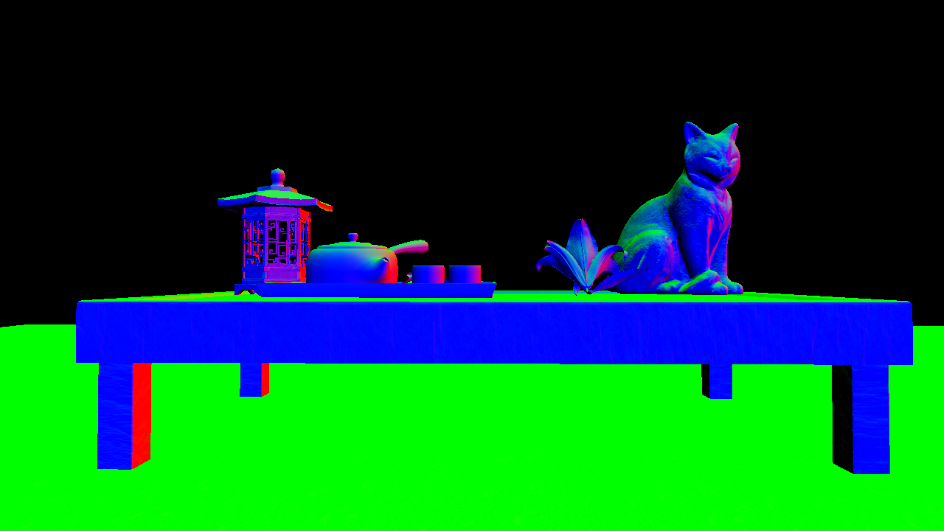

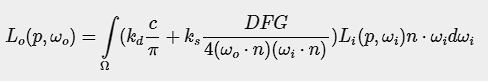

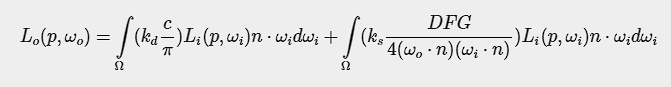

The largest part of the program is the set of rules that govern the rendering system.

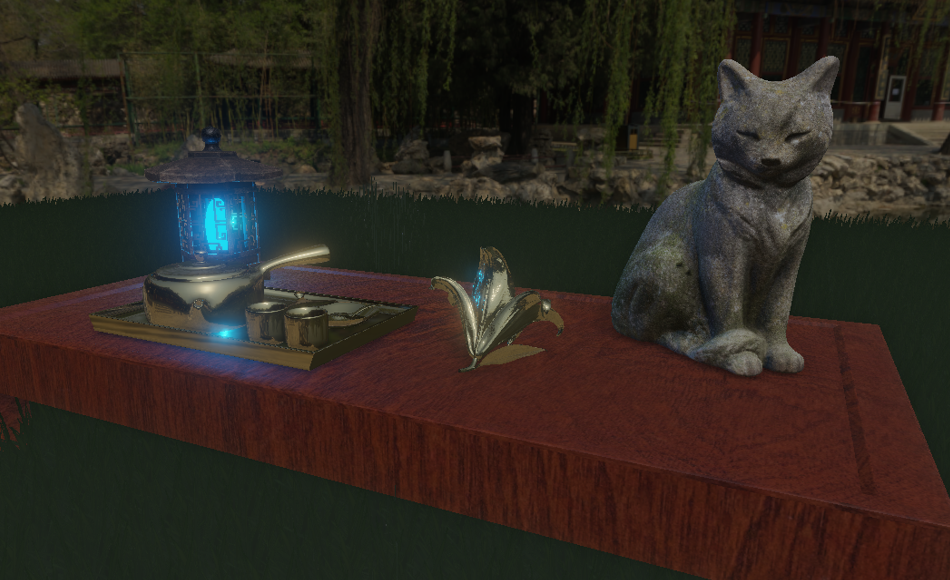

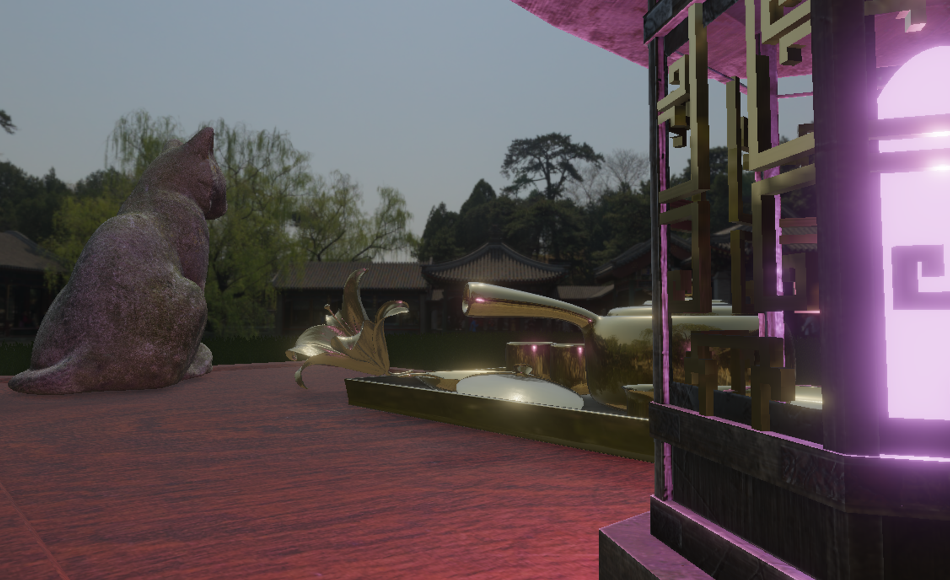

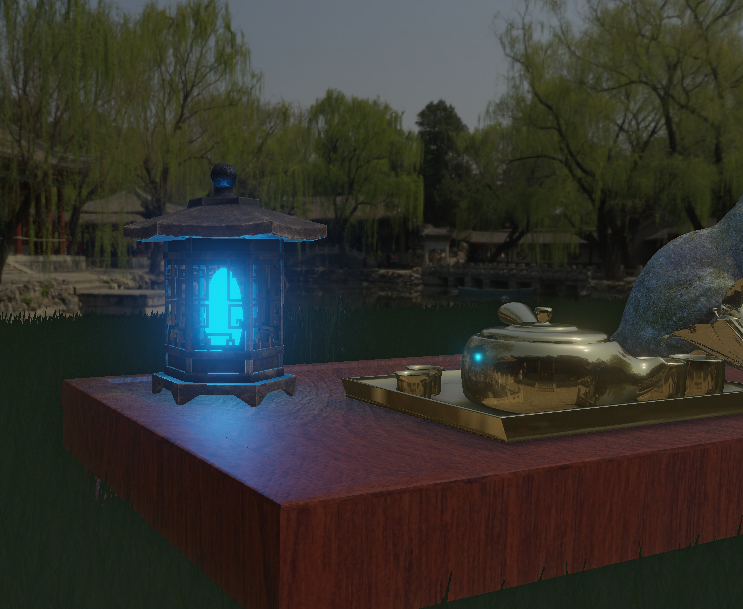

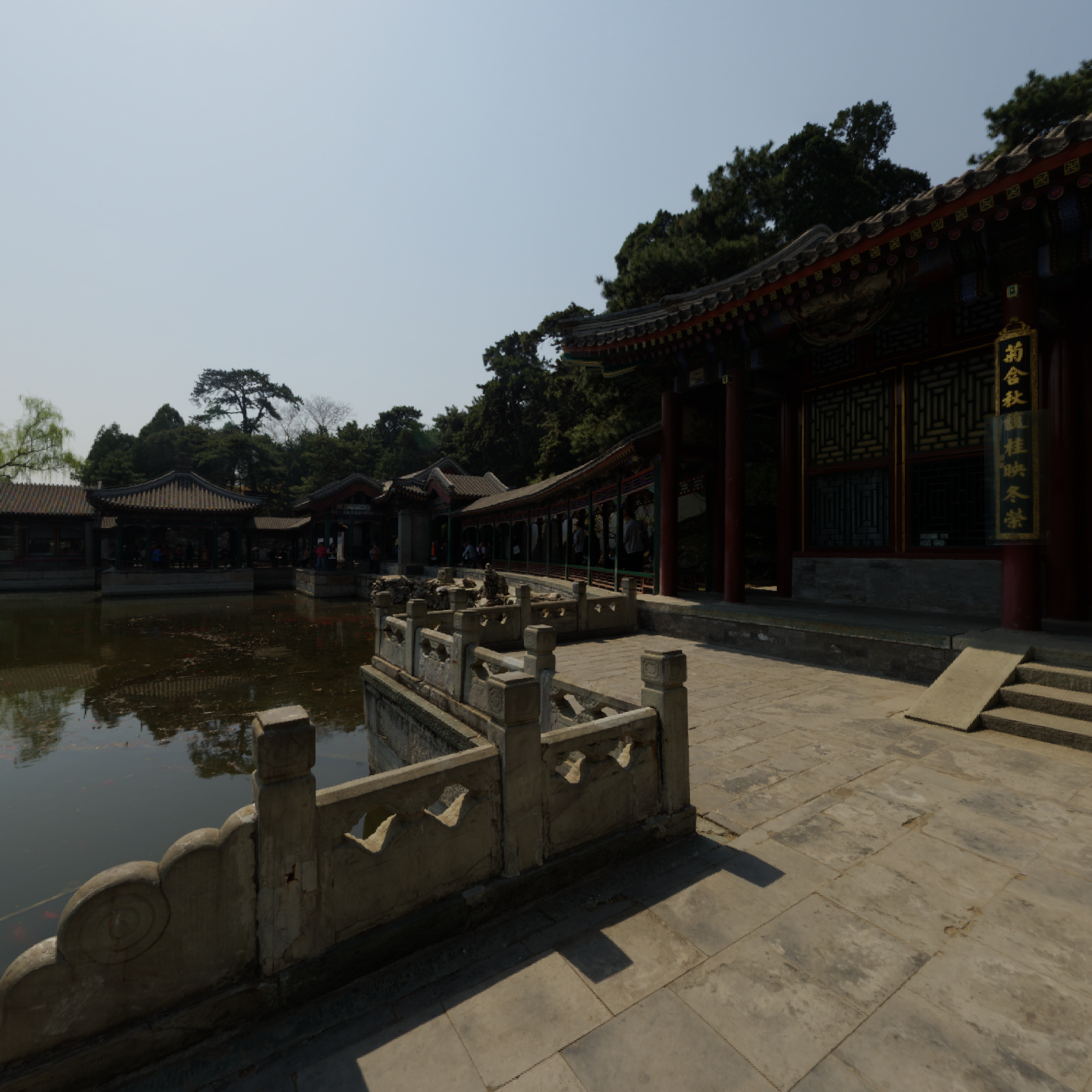

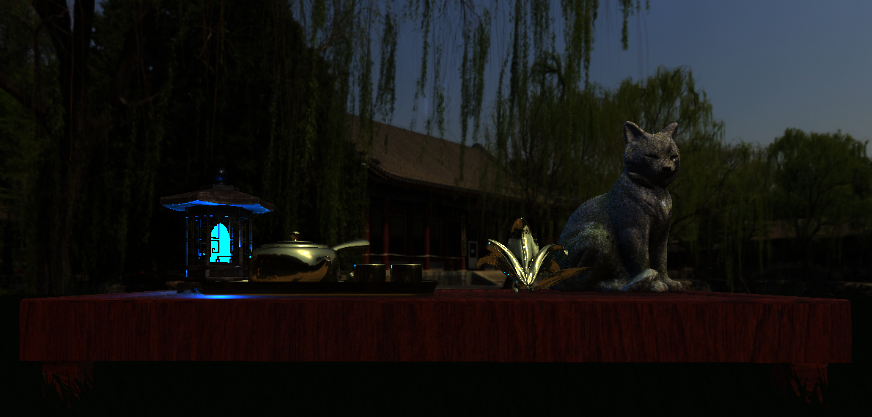

The scene uses PBR (Physically Based Rendering).

The aim is to simulate the behaviour of light on objects and their surfaces as realistically as possible.

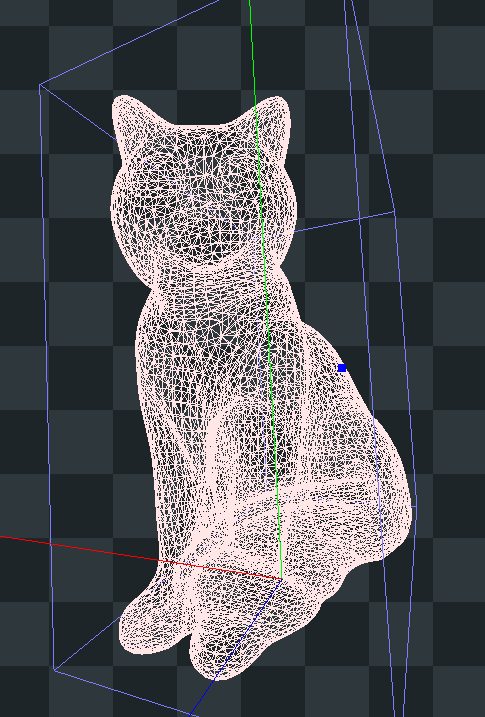

Each object is assigned textures that describe how its surface behaves when exposed to light.

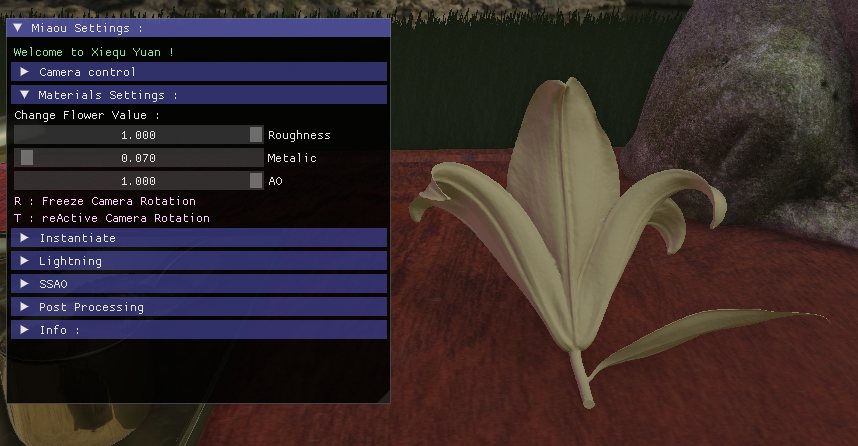

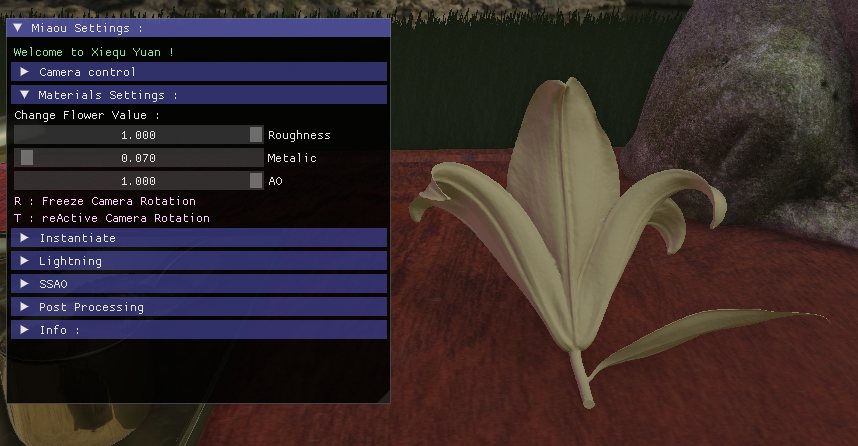

Roughness: the higher this value, the more the reflected light is scattered in different directions, which blurs the highlights. A low value corresponds to a smooth surface with sharp reflections.
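To put numbers on this, here is a short worked example. It assumes the roughness value feeds a GGX (Trowbridge-Reitz) normal distribution with the common remapping $\alpha = \text{roughness}^2$; the scene's actual distribution function is not shown here, so treat this as an illustration rather than the exact formula used.

$$
D(\mathbf{n}, \mathbf{h}) = \frac{\alpha^2}{\pi \left( (\mathbf{n} \cdot \mathbf{h})^2 (\alpha^2 - 1) + 1 \right)^2}, \qquad \alpha = \text{roughness}^2
$$

At the centre of the highlight, where $\mathbf{n} \cdot \mathbf{h} = 1$, this simplifies to $D = 1 / (\pi \alpha^2)$:

$$
\text{roughness} = 0.1 \Rightarrow D \approx 3183, \qquad
\text{roughness} = 0.5 \Rightarrow D \approx 5.09, \qquad
\text{roughness} = 1.0 \Rightarrow D \approx 0.318
$$

A smooth surface concentrates its reflection into a tiny, very bright spot, while a rough one spreads the same light into a wide, dim lobe.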

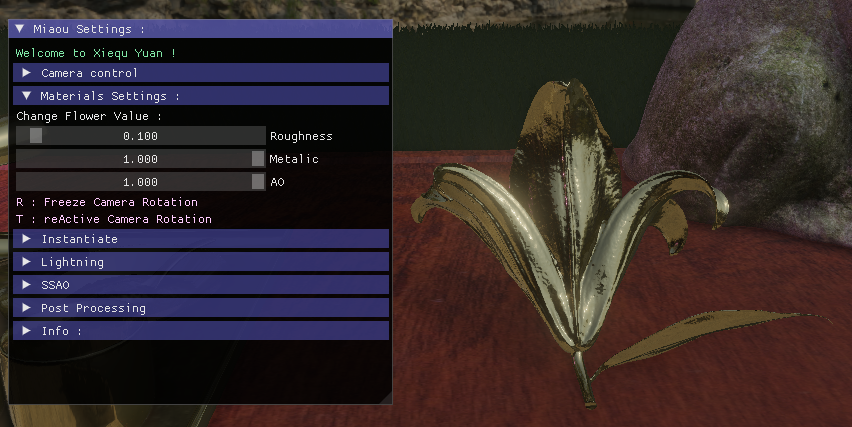

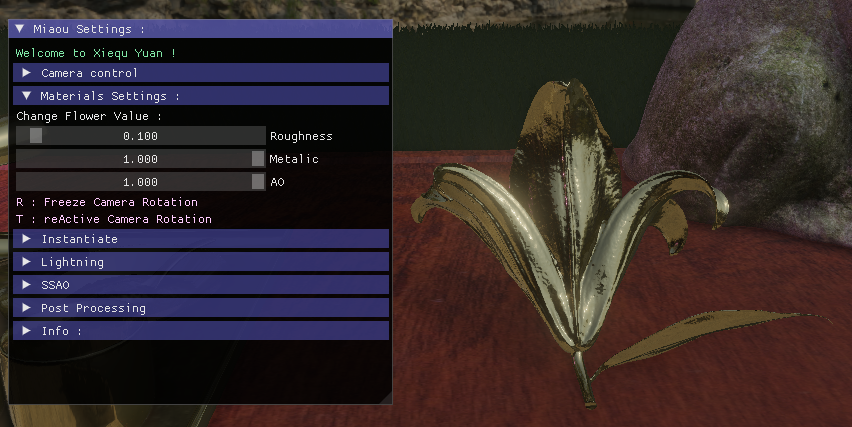

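Metallic: the higher the value, the more the surface behaves like a metal. It reflects more light, its reflections take on the colour of the surface, and its diffuse colour fades out.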
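As a minimal sketch, the effect of this value on the base reflectivity can be isolated in a small helper. The function name `baseReflectivity` is hypothetical; the `0.04` default for non-metals and the `mix` call mirror the shader excerpt further down.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
// Hypothetical helper (not part of the original shader).
// Returns the reflectance at normal incidence (F0) for a surface.
vec3 baseReflectivity(vec3 albedo, float metallic)
{
    // Non-metals reflect roughly 4% of the incoming light, without tint.
    vec3 dielectricF0 = vec3(0.04);

    // mix(a, b, t) = a * (1.0 - t) + b * t
    // metallic = 0.0 -> F0 = 0.04 (plastic-like)
    // metallic = 1.0 -> F0 = albedo (reflections tinted by the surface colour)
    return mix(dielectricF0, albedo, metallic);
}
~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Intermediate values blend linearly between the two behaviours, which is why the metallic texture is usually close to either 0 or 1.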

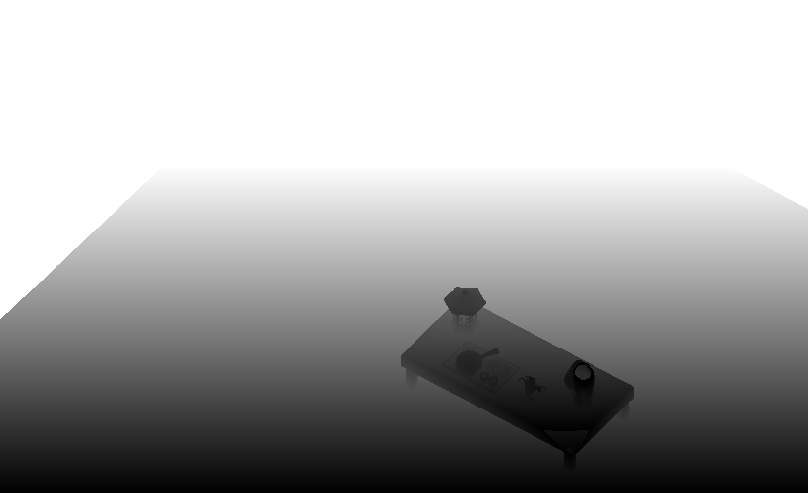

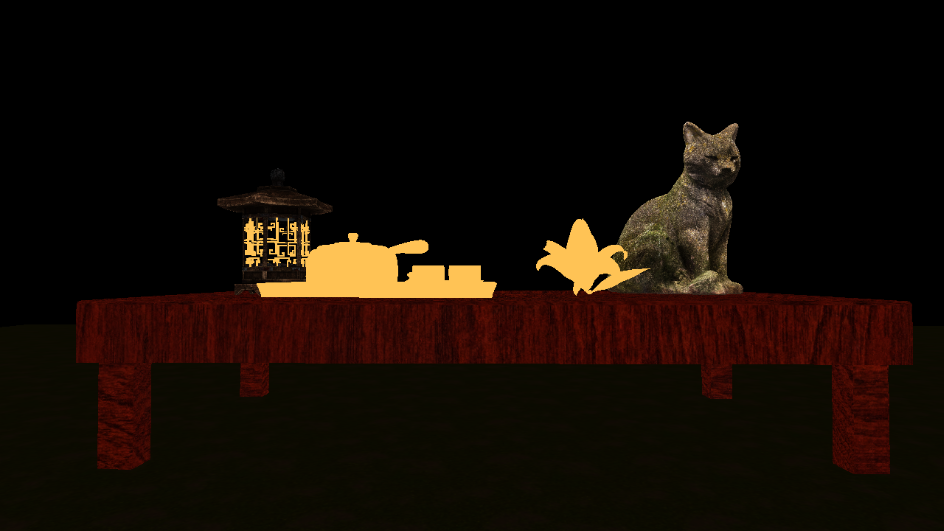

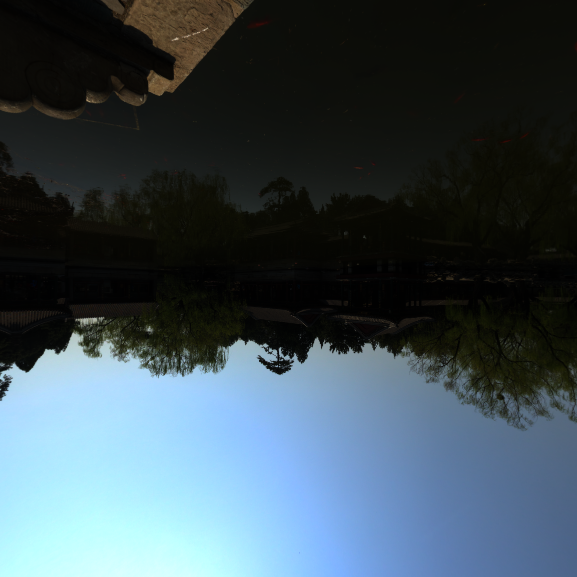

AO (ambient occlusion): simulates the soft shadows that form in the hollows and crevices of an object.

As seen above, AO adds a sense of depth to the object itself.
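A minimal sketch of how an occlusion factor is typically applied is shown here. The helper name `applyAmbientOcclusion` and the choice to darken only the ambient term are assumptions for illustration; they are not taken from the scene's shader.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
// Hypothetical helper (assumption): darkens an ambient lighting term
// using an occlusion factor in the range [0, 1].
vec3 applyAmbientOcclusion(vec3 ambient, float ao)
{
    // ao = 1.0 -> fully exposed, ambient light is left untouched
    // ao = 0.0 -> fully occluded, ambient light is removed
    return ambient * ao;
}
~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Because the factor is a simple multiplier, several occlusion sources (for example a baked AO texture and a screen-space AO pass) can be combined by multiplying them together before this step.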

You can experiment with these three concepts on the flower in my scene by tweaking the values.

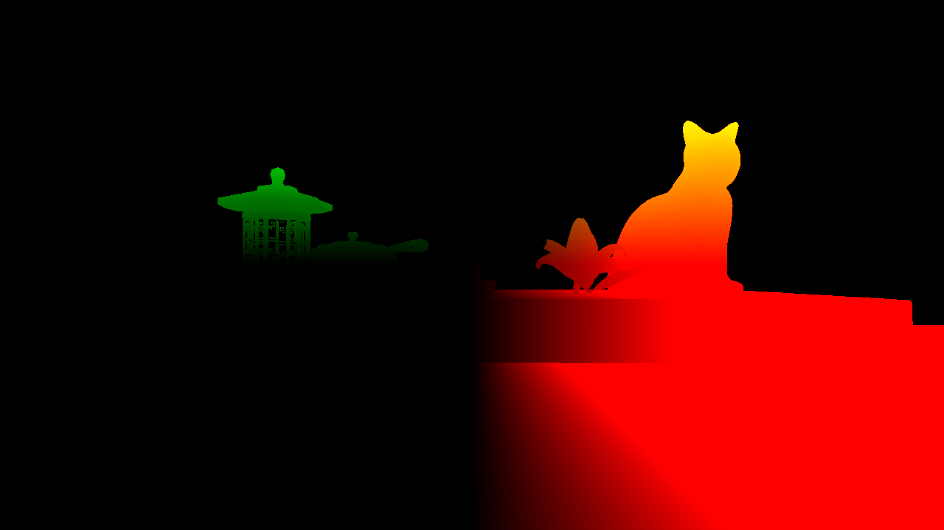

Roughness visual properties

Metallic visual properties

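PBR also obeys the principle of energy conservation: a surface never reflects more light than it receives. This is why the shader below derives the diffuse weight `kD` from what is left over after the specular weight `kS`.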
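In the shader's own terms (`kS`, `kD`, `metallic`, and the Fresnel term `F`), the energy split can be written as the following worked equation. The numeric values are illustrative only.

$$
k_S = F, \qquad k_D = (1 - k_S)\,(1 - \text{metallic})
$$

For a non-metal seen head-on with $F = 0.04$ and $\text{metallic} = 0$, this gives $k_D = 0.96$: 4% of the light is reflected specularly and the remaining 96% is available for diffuse shading. With $\text{metallic} = 0.5$ the diffuse weight drops to $0.48$, and with $\text{metallic} = 1$ it becomes $0$, so a pure metal has no diffuse contribution at all.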

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

// material properties, read from the G-buffer
vec3 albedo = texture(gAlbedo, TexCoords).rgb;
float metallic = texture(gPosition, TexCoords).a;  // stored in the alpha channel of gPosition
float roughness = texture(gNormal, TexCoords).a;   // stored in the alpha channel of gNormal
float ao = texture(gAlbedo, TexCoords).a;          // texture AO, stored in the alpha channel of gAlbedo
float ssao = texture(SSAOMap, TexCoords).r;        // screen-space AO
float combined_ao = ssao * ao;

// input lighting data
vec3 N = mat3(inverseViewMatrix) * texture(gNormal, TexCoords).rgb; // rotate the stored normal by the inverse view matrix
vec3 V = normalize(camPos - WorldPos);  // direction from the surface to the camera
vec3 R = reflect(-V, N);                // reflection of the view direction about N

// calculate reflectance at normal incidence:
// 0.04 for non-metals, the albedo colour for metals
vec3 F0 = vec3(0.04);
F0 = mix(F0, albedo, metallic);

// reflectance equation
vec3 Lo = vec3(0.0);

// calculate per-light radiance (directional light, so no attenuation)
vec3 L = normalize(-directionalLightDirection);
vec3 H = normalize(V + L);  // half vector between view and light directions
//float distance = length(lightPositions[i] - WorldPos);
//float attenuation = 1.0 / (distance * distance);
vec3 radiance = directionalLightColor;

// Cook-Torrance BRDF
float NDF = DistributionGGX(N, H, roughness);
float G = GeometrySmith(N, V, L, roughness);
vec3 F = fresnelSchlick(max(dot(H, V), 0.0), F0);

vec3 numerator = NDF * G * F;
// + 0.0001 prevents a division by zero
float denominator = 4.0 * max(dot(N, V), 0.0) * max(dot(N, L), 0.0) + 0.0001;

// energy conservation: diffuse gets what specular leaves, and metals have no diffuse
vec3 kS = F;
vec3 kD = vec3(1.0) - kS;
kD *= 1.0 - metallic;

float NdotL = max(dot(N, L), 0.0);

...

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
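
The excerpt calls `DistributionGGX`, `GeometrySmith` and `fresnelSchlick` without showing their bodies. The sketch below gives the formulation most commonly paired with this kind of Cook-Torrance shader (GGX distribution, Smith geometry with the Schlick-GGX approximation, Schlick Fresnel). It is an assumption about what those helpers look like, not the scene's verified source; the `PI` constant and the `GeometrySchlickGGX` helper are likewise introduced here for illustration.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
const float PI = 3.14159265359;

// Normal distribution: how many microfacets are aligned with the half vector H.
float DistributionGGX(vec3 N, vec3 H, float roughness)
{
    float a = roughness * roughness;  // common perceptual remapping
    float a2 = a * a;
    float NdotH = max(dot(N, H), 0.0);
    float denom = NdotH * NdotH * (a2 - 1.0) + 1.0;
    return a2 / (PI * denom * denom);
}

// Geometry term for a single direction (Schlick-GGX, direct-lighting remap of k).
float GeometrySchlickGGX(float NdotX, float roughness)
{
    float r = roughness + 1.0;
    float k = (r * r) / 8.0;
    return NdotX / (NdotX * (1.0 - k) + k);
}

// Smith's method: combine masking (view side) and shadowing (light side).
float GeometrySmith(vec3 N, vec3 V, vec3 L, float roughness)
{
    float NdotV = max(dot(N, V), 0.0);
    float NdotL = max(dot(N, L), 0.0);
    return GeometrySchlickGGX(NdotV, roughness) * GeometrySchlickGGX(NdotL, roughness);
}

// Fresnel term: reflectivity rises towards 1.0 at grazing angles.
vec3 fresnelSchlick(float cosTheta, vec3 F0)
{
    return F0 + (1.0 - F0) * pow(clamp(1.0 - cosTheta, 0.0, 1.0), 5.0);
}
~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

All three functions take their inputs as normalized vectors, which matches how `V`, `L` and `H` are built in the excerpt. `N` is the exception: it is read from `gNormal` and rotated without a `normalize` call, so this sketch assumes the normals stored in `gNormal` are already unit length.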